Are you ready to uncover the fascinating world of probability in statistics? In this article, we will work through the key ideas step by step: what probability is, the rules for combining probabilities, common probability distributions, conditional probability and Bayes’ theorem, expected value and variance, the law of large numbers, hypothesis testing, and how probability underpins statistical tools such as confidence intervals and regression. Whether you are revising for GCSE Maths or studying further, a solid grasp of these ideas will sharpen your problem-solving skills and help you tackle probability questions with confidence. Let’s begin!

Definition of Probability

The concept of probability

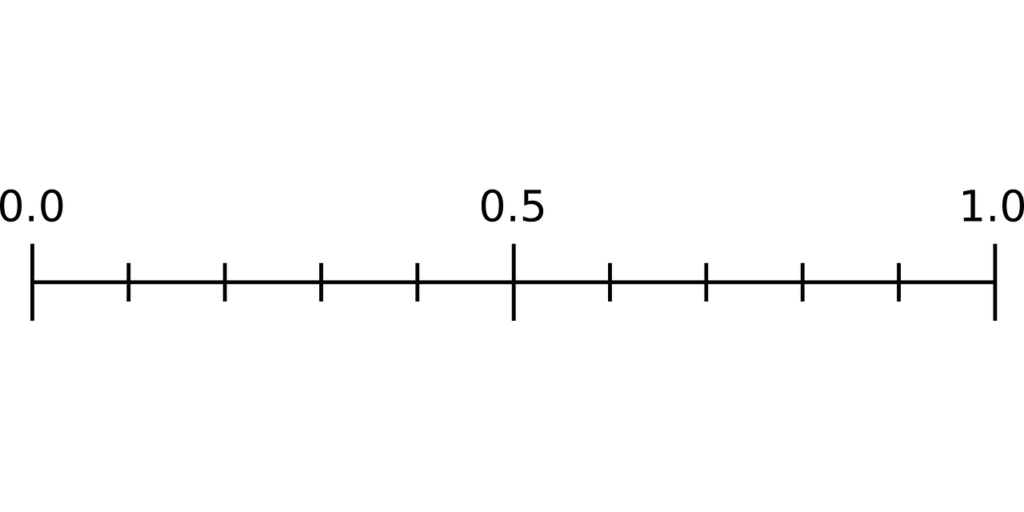

Probability is a fundamental concept in statistics and mathematics that measures the likelihood of a particular event or outcome occurring. It is a numerical value between 0 and 1, with 0 indicating impossibility and 1 indicating certainty. But what does it really mean?

In simple terms, probability represents the chance that something will happen. It allows us to quantify our uncertainty and make informed decisions. Whether you’re playing a game of dice, predicting the weather, or analyzing data, understanding probability is crucial to gaining insights and making accurate predictions.

Probability as a measure of uncertainty

Probability provides a way to quantify uncertainty. It allows us to determine the likelihood of different outcomes based on available information. For example, if there’s a 30% chance of rain tomorrow, we can make decisions about carrying an umbrella or planning outdoor activities accordingly.

By assigning probabilities to different events or outcomes, we can assess the level of uncertainty associated with each possibility. Probability allows us to express our subjective beliefs and determine the level of confidence we have in a particular outcome.

Example of probability calculation

To better understand how probability works, let’s consider a simple example. Imagine you have a bag with five red balls and three blue balls. What is the probability of drawing a red ball from the bag?

Assuming each ball is equally likely to be drawn, we can calculate the probability of drawing a red ball by dividing the number of favorable outcomes (red balls) by the total number of possible outcomes (all the balls in the bag). There are 5 red balls out of 8 in total, so the probability is 5/8, or 0.625.

This example demonstrates how probability allows us to determine the likelihood of a specific event happening by comparing it to all possible outcomes. It forms the foundation for more complex probability calculations and statistical analyses.
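A minimal sketch of this calculation in Python, assuming (as the example implies) that every ball is equally likely to be drawn. It counts favorable outcomes exactly with `fractions.Fraction`, then checks the answer with a quick simulation; the seed and the number of draws are arbitrary choices for illustration.

```python
from fractions import Fraction
import random

# The bag from the example: five red balls and three blue balls.
bag = ["red"] * 5 + ["blue"] * 3

# Exact probability: favorable outcomes divided by total outcomes.
p_red = Fraction(bag.count("red"), len(bag))
print(p_red, float(p_red))  # 5/8 0.625

# Simulation check: draw one ball at random (with replacement) many times.
random.seed(1)
draws = 100_000
reds = sum(random.choice(bag) == "red" for _ in range(draws))
estimate = reds / draws
print(f"simulated estimate: {estimate:.3f}")
```

The simulated proportion will not be exactly 0.625, but it should land very close to it.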

Probability Rules

Addition rule

The addition rule of probability gives the probability of the union of two events: add the individual probabilities of each event and subtract the probability of their intersection, P(A∪B) = P(A) + P(B) − P(A∩B). In other words, to find the probability of either event A or event B occurring, you sum their individual probabilities and subtract the probability of both happening together. The subtraction is needed because outcomes in which both A and B occur would otherwise be counted twice.
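The addition rule can be checked by listing the outcomes of a single roll of a fair six-sided die. The events are chosen only for illustration: A is "the roll is even" and B is "the roll is greater than 3". They overlap at 4 and 6, so the intersection term matters.

```python
from fractions import Fraction

outcomes = set(range(1, 7))  # one roll of a fair six-sided die

def prob(event):
    """Probability of an event (a set of outcomes) when all outcomes are equally likely."""
    return Fraction(len(event & outcomes), len(outcomes))

A = {2, 4, 6}   # the roll is even
B = {4, 5, 6}   # the roll is greater than 3

direct = prob(A | B)                        # count the union {2, 4, 5, 6} directly
by_rule = prob(A) + prob(B) - prob(A & B)   # addition rule: 1/2 + 1/2 - 1/3
print(direct, by_rule)  # 2/3 2/3
```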

Multiplication rule

The multiplication rule of probability is used to calculate the probability of two events occurring together. In general, P(A∩B) = P(A) × P(B|A), where P(B|A) is the probability of B given that A has occurred. If event A and event B are independent, P(B|A) = P(B), and the rule simplifies to P(A∩B) = P(A) × P(B): the joint probability is just the product of the individual probabilities.
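A sketch using the bag from the earlier example (five red balls, three blue) for the event "both of two draws are red". With replacement, the draws are independent and the probabilities simply multiply. Without replacement, the second draw depends on the first, so the second factor must be the conditional probability, as in the general form P(A∩B) = P(A) × P(B|A). The enumeration of ordered pairs is included only as a check.

```python
from fractions import Fraction
from itertools import permutations, product

red, blue = 5, 3
total = red + blue

# With replacement: independent draws, so P(A and B) = P(A) * P(B).
with_replacement = Fraction(red, total) * Fraction(red, total)

# Without replacement: one red ball is gone, so P(A and B) = P(A) * P(B | A).
without_replacement = Fraction(red, total) * Fraction(red - 1, total - 1)

# Check both by listing every ordered pair of draws (balls 0 to 4 are red).
balls = range(total)

def is_red(ball):
    return ball < red

pairs_with = list(product(balls, repeat=2))   # the same ball may come up twice
pairs_without = list(permutations(balls, 2))  # two different balls
check_with = Fraction(sum(is_red(a) and is_red(b) for a, b in pairs_with), len(pairs_with))
check_without = Fraction(sum(is_red(a) and is_red(b) for a, b in pairs_without), len(pairs_without))

print(with_replacement, without_replacement)  # 25/64 5/14
```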

Complementary rule

The complementary rule of probability gives the probability of an event not occurring. The complement of event A ("not A") happens exactly when A does not, and since one of the two must happen, the probability of the complement is 1 minus the probability of A: P(not A) = 1 − P(A). For example, if there is a 30% chance of rain tomorrow, the chance of no rain is 1 − 0.3 = 0.7.
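The complementary rule is most useful when an event has many cases but its complement has only one. As an illustrative example: the probability of rolling at least one six in four rolls of a fair die is 1 minus the probability of rolling no sixes at all. This sketch computes it with the rule and confirms it by listing all 6^4 outcomes.

```python
from fractions import Fraction
from itertools import product

rolls = 4
p_no_six = Fraction(5, 6) ** rolls   # each independent roll avoids a six
p_at_least_one = 1 - p_no_six        # complementary rule

# Brute-force check over all 6**4 = 1296 equally likely sequences of rolls.
sequences = list(product(range(1, 7), repeat=rolls))
count = sum(6 in seq for seq in sequences)
brute_force = Fraction(count, len(sequences))

print(p_at_least_one, float(p_at_least_one))  # 671/1296, about 0.518
```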

Conditional probability

Conditional probability deals with the probability of an event occurring given that another event has already occurred. It is written P(A|B), read as "the probability of A given B". The formula for calculating it is P(A|B) = P(A∩B) / P(B), where P(A∩B) is the probability that both A and B occur and P(B) is the probability of event B occurring; the formula requires P(B) > 0.

Probability Distributions

Discrete probability distributions

A discrete probability distribution deals with random variables that can only take on specific, isolated values. It is often represented by a probability mass function (PMF), which assigns probabilities to each possible value of the random variable. Examples of discrete probability distributions include the binomial distribution, Poisson distribution, and geometric distribution.

Continuous probability distributions

A continuous probability distribution deals with random variables that can take on any value within a range. Unlike discrete distributions, continuous distributions are represented by a probability density function (PDF). Examples of continuous probability distributions include the normal distribution, exponential distribution, and uniform distribution.

Probability mass function

A probability mass function (PMF) assigns a probability to each possible value of a discrete random variable. Every probability is non-negative, and together they sum to 1. The PMF of a random variable is often represented by a table, graph, or formula, and the probability of any event is found by adding the PMF values of the outcomes that make up that event.
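As an illustration, here is a sketch that builds the PMF of the total shown by two fair dice, represented as a Python dictionary from each possible total to its probability. It checks that the probabilities sum to 1 and then adds PMF values to get the probability of an event.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count how many of the 36 equally likely outcomes give each total.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# PMF: each possible total (2 to 12) maps to its probability.
pmf = {total: Fraction(n, 36) for total, n in sorted(counts.items())}

for total, p in pmf.items():
    print(total, p)

# A valid PMF has non-negative probabilities that sum to 1.
assert sum(pmf.values()) == 1

# The probability of an event is a sum of PMF values, e.g. P(total >= 10).
p_at_least_10 = sum(p for total, p in pmf.items() if total >= 10)
print(p_at_least_10)  # 1/6
```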

Probability density function

A probability density function (PDF) describes how probability is spread across the possible values of a continuous random variable. Unlike a PMF, a PDF does not give the probability of a specific value; in fact, the probability that a continuous random variable takes any single exact value is 0. Instead, the probability that the variable falls within an interval is the area under the PDF curve over that interval, and the total area under the whole curve is 1.
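To show that probabilities come from areas, this sketch integrates the standard normal PDF (mean 0, standard deviation 1, chosen only as a familiar example) from −1 to 1 with the midpoint rule. It then compares the result with the exact value obtained from `statistics.NormalDist().cdf` in Python's standard library. The number of slices is an arbitrary choice.

```python
import math
from statistics import NormalDist

def standard_normal_pdf(x):
    """Density of the standard normal distribution at x (a density, not a probability)."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def area_under(pdf, a, b, slices=10_000):
    """Approximate the integral of pdf from a to b with the midpoint rule."""
    width = (b - a) / slices
    return sum(pdf(a + (i + 0.5) * width) for i in range(slices)) * width

approx = area_under(standard_normal_pdf, -1, 1)
exact = NormalDist().cdf(1) - NormalDist().cdf(-1)
print(f"{approx:.4f} {exact:.4f}")  # 0.6827 0.6827

# A single exact value is an interval of width zero, so its probability is zero.
print(area_under(standard_normal_pdf, 0.5, 0.5))  # 0.0
```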

Common Probability Distributions

Uniform distribution

The uniform distribution describes situations in which all outcomes in a given set or range are equally likely. It comes in two forms. The discrete uniform distribution covers a finite set of equally likely outcomes, such as the six faces of a fair die. The continuous uniform distribution covers every value in an interval [a, b]; its PDF is the constant 1/(b − a), which is why it is drawn as a rectangle, and it models situations such as selecting a random number from a given interval.
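A sketch of both forms. For the discrete case, each face of a fair die gets probability 1/6. For the continuous case on [a, b], the density is the constant 1/(b − a), so the probability of landing in a sub-interval [c, d] is the length of the part inside [a, b] divided by the length of [a, b]. The interval [0, 10] and the sub-interval [2, 5] are arbitrary illustrative choices, and the helper uses integer endpoints so the answer stays exact.

```python
from fractions import Fraction

# Discrete uniform: a fair die, each face equally likely.
die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def uniform_interval_prob(a, b, c, d):
    """P(c <= X <= d) for X uniform on [a, b]; integer endpoints keep the result exact."""
    overlap = max(0, min(b, d) - max(a, c))   # length of [c, d] that lies inside [a, b]
    return Fraction(overlap, b - a)            # length times the constant density 1/(b - a)

p_cont = uniform_interval_prob(0, 10, 2, 5)
print(die_pmf[3], p_cont)  # 1/6 3/10
```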

Bernoulli distribution

The Bernoulli distribution is a discrete probability distribution that models a single trial with two possible outcomes: a success with probability p or a failure with probability (1-p). It is often used to represent binary events, such as flipping a coin or passing/failing an exam.

Binomial distribution

The binomial distribution is a discrete probability distribution that models the number of successes in a fixed number of independent Bernoulli trials. It is characterized by two parameters: the number of trials (n) and the probability of success in each trial (p).
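The probability of exactly k successes is P(X = k) = C(n, k) p^k (1 − p)^(n − k): C(n, k) counts the ways to place the k successes among the n trials, and each such arrangement has probability p^k (1 − p)^(n − k) because the trials are independent. A sketch using exact fractions; with n = 1 it reduces to the Bernoulli distribution. The coin and the value p = 3/10 are illustrative.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ B(n, p): ways to choose the k successes times the probability of one arrangement."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

half = Fraction(1, 2)
p_three_heads = binomial_pmf(3, 5, half)   # exactly 3 heads in 5 fair coin flips
print(p_three_heads)  # 5/16

# With n = 1 the binomial is the Bernoulli distribution: success with probability p.
p = Fraction(3, 10)
print(binomial_pmf(1, 1, p), binomial_pmf(0, 1, p))  # 3/10 7/10
```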

Normal distribution

The normal distribution, also known as the Gaussian distribution, is a continuous probability distribution that is symmetric and bell-shaped. It is widely used in statistics due to its mathematical properties and its ability to approximate many real-world phenomena. The mean determines its location (the centre and line of symmetry of the bell), and the standard deviation determines its spread: a larger standard deviation gives a wider, flatter curve.
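Python's standard library includes `statistics.NormalDist`, which is enough to check the familiar rule that roughly 68%, 95% and 99.7% of a normal distribution lies within one, two and three standard deviations of the mean. The mean 100 and standard deviation 15 are arbitrary; changing them moves and stretches the curve but leaves these proportions unchanged.

```python
from statistics import NormalDist

dist = NormalDist(mu=100, sigma=15)   # illustrative parameters

shares = []
for k in (1, 2, 3):
    lo, hi = dist.mean - k * dist.stdev, dist.mean + k * dist.stdev
    share = dist.cdf(hi) - dist.cdf(lo)   # area under the curve between lo and hi
    shares.append(share)
    print(f"within {k} SD: {share:.4f}")
# within 1 SD: 0.6827
# within 2 SD: 0.9545
# within 3 SD: 0.9973
```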

Conditional Probability

Definition of conditional probability

Conditional probability is a measure of the probability of an event occurring given that another event has already occurred. It allows us to update our probabilities based on new information or conditions. The conditional probability of event A given event B is denoted as P(A|B) and is calculated as the probability of both events A and B occurring divided by the probability of event B.

Calculating conditional probability

To calculate conditional probability, we use the formula P(A|B) = P(A∩B) / P(B), where P(A∩B) represents the probability of both events A and B occurring, and P(B) is the probability of event B occurring (which must be greater than 0). Dividing by P(B) restricts attention to the outcomes in which B happens and rescales them so that their total probability is 1; P(A|B) is then the share of that probability in which A also happens.
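Conditioning on B amounts to restricting attention to the outcomes in B. This sketch enumerates one roll of a fair die, with illustrative events A = "the roll is a six" and B = "the roll is even", and computes P(A|B) both from the formula and by counting within B.

```python
from fractions import Fraction

outcomes = range(1, 7)   # one roll of a fair die
A = {6}                  # the roll is a six
B = {2, 4, 6}            # the roll is even

def prob(event):
    """Probability of an event when all six outcomes are equally likely."""
    return Fraction(sum(1 for o in outcomes if o in event), len(outcomes))

p_a_given_b = prob(A & B) / prob(B)          # P(A|B) = P(A and B) / P(B), with P(B) > 0
by_counting = Fraction(len(A & B), len(B))   # share of B's outcomes that are also in A
print(p_a_given_b, by_counting, prob(A))     # 1/3 1/3 1/6
```

Knowing the roll is even raises the probability of a six from 1/6 to 1/3, because half of the outcomes have been ruled out.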

Bayes’ theorem

Bayes’ theorem is a fundamental probability formula that allows us to update probabilities based on new evidence or information. It provides a framework for combining prior knowledge with new data to make more accurate probabilistic predictions. Bayes’ theorem states that the posterior probability of an event A, given evidence B, is equal to the prior probability of A multiplied by the likelihood of B given A, divided by the probability of B: P(A|B) = P(B|A) × P(A) / P(B).
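In symbols, P(A|B) = P(B|A) × P(A) / P(B), where P(B) can be found by adding up the ways B can happen with and without A. A worked sketch using hypothetical figures for a diagnostic test: 1% of people have a condition, the test detects it 99% of the time, and it gives a false positive 5% of the time. These numbers are made up for illustration.

```python
from fractions import Fraction

prior = Fraction(1, 100)            # P(condition), hypothetical
sensitivity = Fraction(99, 100)     # P(positive | condition), hypothetical
false_positive = Fraction(5, 100)   # P(positive | no condition), hypothetical

# Total probability of a positive result: with the condition or without it.
p_positive = sensitivity * prior + false_positive * (1 - prior)

# Bayes' theorem: posterior = likelihood * prior / probability of the evidence.
posterior = sensitivity * prior / p_positive
print(posterior, float(posterior))  # 1/6 0.16666666666666666
```

Even with an accurate test, a positive result here means only about a 1 in 6 chance of having the condition, because the condition is rare: the prior matters.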

Probability and Independence

Definition of independence

In probability theory, independence refers to the lack of relationship or dependence between two or more events. If events A and B are independent, the occurrence or non-occurrence of one event does not affect the probability of the other event happening. Independence can be expressed mathematically as P(A∩B) = P(A) × P(B), where P(A∩B) represents the joint probability of events A and B occurring, and P(A) and P(B) are their respective individual probabilities.

Calculating probabilities of independent events

When events are independent, calculating probabilities becomes straightforward. To find the probability of both independent events A and B occurring, we multiply their individual probabilities: P(A∩B) = P(A) × P(B). The rule extends to any number of mutually independent events; for three events, for example, P(A∩B∩C) = P(A) × P(B) × P(C).
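Independence can be checked directly from the definition by enumerating a sample space. This sketch uses two fair dice with illustrative events A = "the first die is even" and B = "the second die shows 5 or 6", then applies the product rule to three fair coin flips.

```python
from fractions import Fraction
from itertools import product

space = list(product(range(1, 7), repeat=2))   # 36 equally likely (first, second) pairs

def prob(predicate):
    """Probability that a pair of dice satisfies the predicate."""
    return Fraction(sum(1 for s in space if predicate(s)), len(space))

p_a = prob(lambda s: s[0] % 2 == 0)                    # first die even
p_b = prob(lambda s: s[1] >= 5)                        # second die shows 5 or 6
p_ab = prob(lambda s: s[0] % 2 == 0 and s[1] >= 5)     # both at once
print(p_ab, p_a * p_b, p_ab == p_a * p_b)  # 1/6 1/6 True

# The rule extends to several mutually independent events, e.g. three heads in a row.
p_three_heads = Fraction(1, 2) ** 3
print(p_three_heads)  # 1/8
```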

Mutually exclusive events

Mutually exclusive events are events that cannot happen at the same time. If event A occurs, event B cannot occur, and vice versa. In this case, the probability of both events occurring simultaneously is zero, so the addition rule simplifies: the probability of either event A or event B occurring is equal to the sum of their individual probabilities, P(A∪B) = P(A) + P(B).
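A quick check with one roll of a fair die, using illustrative events A = "roll a 1 or 2" and B = "roll a 6". The events share no outcomes, so the intersection term of the general addition rule is zero. The same numbers also show that mutually exclusive events with non-zero probabilities are not independent: P(A∩B) is 0, but P(A) × P(B) is not.

```python
from fractions import Fraction

outcomes = set(range(1, 7))   # one roll of a fair die
A, B = {1, 2}, {6}

def prob(event):
    """Probability of an event when all six outcomes are equally likely."""
    return Fraction(len(event), len(outcomes))

assert A & B == set()                   # mutually exclusive: no shared outcomes
p_union = prob(A | B)
print(p_union, prob(A) + prob(B))       # 1/2 1/2

# Not independent: the joint probability is 0, but the product is not.
print(prob(A & B), prob(A) * prob(B))   # 0 1/18
```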

Expected Value and Variance

Mean and expected value

The mean, or expected value, of a random variable is a measure of central tendency: it describes where the distribution is centred. For a discrete random variable, it is the sum of all possible values, each weighted by its probability: E(X) = Σ x × P(X = x). "Expected value" and "mean" are two names for the same quantity, which can be interpreted as the long-run average outcome when a random experiment is repeated many times.
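For a discrete random variable, E(X) = Σ x × P(X = x). A sketch for one roll of a fair die, computed exactly from the PMF and then compared with the long-run average of simulated rolls; the seed and the number of rolls are arbitrary.

```python
from fractions import Fraction
import random

pmf = {x: Fraction(1, 6) for x in range(1, 7)}   # fair die

expected = sum(x * p for x, p in pmf.items())    # probability-weighted sum of values
print(expected, float(expected))                 # 7/2 3.5

# Long-run average of many simulated rolls.
random.seed(0)
rolls = [random.randint(1, 6) for _ in range(100_000)]
average = sum(rolls) / len(rolls)
print(average)                                   # close to 3.5
```

Note that 3.5 is never actually rolled: the expected value is an average, not a most likely outcome.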

Variance and standard deviation

Variance and standard deviation are measures of dispersion that quantify the spread or variability of a random variable. The variance is the probability-weighted average of the squared differences between each value of the random variable and the mean: Var(X) = E[(X − μ)²], where μ = E(X). The standard deviation is the square root of the variance; because it is measured in the same units as the variable itself, it is easier to interpret as a typical distance from the mean.
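For a random variable, Var(X) = E[(X − μ)²], which can also be computed with the shortcut E(X²) − μ². A sketch for one roll of a fair die, checking that both forms agree and taking the square root for the standard deviation.

```python
from fractions import Fraction
import math

pmf = {x: Fraction(1, 6) for x in range(1, 7)}   # fair die

mu = sum(x * p for x, p in pmf.items())
variance = sum((x - mu) ** 2 * p for x, p in pmf.items())     # E[(X - mu)^2]
shortcut = sum(x * x * p for x, p in pmf.items()) - mu ** 2   # E(X^2) - mu^2
sd = math.sqrt(float(variance))                               # same units as X

print(variance, shortcut, round(sd, 4))  # 35/12 35/12 1.7078
```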

Linearity of expectations

The linearity of expectations is a property of the expected value of linear combinations of random variables. It states that the expected value of a sum of random variables is equal to the sum of their expected values, and that constant multipliers can be taken outside. Mathematically, E(aX + bY) = aE(X) + bE(Y), where X and Y are random variables and a and b are constants. This holds whether or not X and Y are independent.
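Linearity holds whether or not the variables are independent. The sketch checks it by enumeration, first on two independent fair dice, then on X and Y = X² taken from the same die, which are clearly dependent. The coefficients 2 and 3 are arbitrary.

```python
from fractions import Fraction
from itertools import product

def expectation(values):
    """Mean of a list of equally likely outcomes."""
    return Fraction(sum(values), len(values))

faces = range(1, 7)
pairs = list(product(faces, repeat=2))   # two independent fair dice

e_x = expectation([x for x, _ in pairs])
e_y = expectation([y for _, y in pairs])
e_combo = expectation([2 * x + 3 * y for x, y in pairs])
print(e_combo, 2 * e_x + 3 * e_y)  # 35/2 35/2

# Dependent case: Y = X**2 from the same roll; linearity still holds.
e_sum_dep = expectation([x + x**2 for x in faces])
print(e_sum_dep, expectation(list(faces)) + expectation([x**2 for x in faces]))  # 56/3 56/3
```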

Law of Large Numbers

Definition of the law of large numbers

The law of large numbers is a fundamental principle in probability theory that states that as the number of independent trials or observations increases, the average of these observations converges to the expected value (and the relative frequency of an event converges to its true probability). In simple terms, the more data we have, the closer our estimates are likely to be to the actual values.
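A simulation sketch: flip a simulated fair coin and record the proportion of heads at a few checkpoints as the number of flips grows. The seed and checkpoints are arbitrary, and the exact proportions depend on the random numbers, but they should settle ever closer to 0.5.

```python
import random

random.seed(42)
heads = 0
checkpoints = {10, 100, 1_000, 10_000, 100_000}
proportions = {}

for n in range(1, 100_001):
    heads += random.random() < 0.5   # one fair coin flip: True counts as 1 head
    if n in checkpoints:
        proportions[n] = heads / n
        print(f"{n:>7} flips: proportion of heads = {proportions[n]:.4f}")
```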

Convergence in probability

Convergence in probability is the form of convergence described by the (weak) law of large numbers. It states that, for any fixed tolerance ε > 0, the probability that the sample mean differs from the true expected value by more than ε approaches 0 as the sample size increases. In other words, the sample mean becomes an increasingly reliable estimate of the population mean as the number of observations grows.
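For a fair coin, the probability in this definition can be computed exactly from the binomial distribution instead of simulated. This sketch computes the chance that the proportion of heads in n flips misses 0.5 by more than an illustrative tolerance ε = 0.05, for increasing n. Exact fractions are used for the comparison so that boundary cases are not misclassified by rounding.

```python
from fractions import Fraction
from math import comb

def prob_far_from_half(n, eps=Fraction(1, 20)):
    """P(|heads/n - 1/2| > eps) for n fair coin flips, computed exactly."""
    far = sum(comb(n, k) for k in range(n + 1)
              if abs(Fraction(k, n) - Fraction(1, 2)) > eps)
    return far / 2**n   # every sequence of n flips has probability 1 / 2**n

probs = {n: prob_far_from_half(n) for n in (10, 100, 1_000)}
for n, p in probs.items():
    print(f"n = {n:>5}: {p:.6f}")
```

The probability of a miss shrinks towards 0 as n grows, which is exactly what convergence in probability requires.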

Strong law of large numbers

The strong law of large numbers is a stronger version of the law of large numbers. It states that the sample mean of independent and identically distributed random variables converges to the expected value almost surely. This means that with probability 1, the sample mean will converge to the true expected value as the number of observations approaches infinity.

Hypothesis Testing

Null and alternative hypotheses

Hypothesis testing is a statistical technique used to make inferences or draw conclusions about a population based on sample data. It involves formulating a null hypothesis (H0) and an alternative hypothesis (HA). The null hypothesis represents the assumption of no effect or no difference, while the alternative hypothesis represents the claim or effect we seek evidence for.

Type I and Type II errors

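In hypothesis testing, there are two types of errors that can occur. A Type I error occurs when we reject the null hypothesis when it is actually true. This is also known as a false positive. A Type II error occurs when we fail to reject the null hypothesis when it is false. This is also known as a false negative. We want both error probabilities to be low, but for a fixed sample size, reducing one usually increases the other. In practice, we fix the probability of a Type I error at the chosen significance level α and, subject to that, try to keep the probability of a Type II error small, for example by collecting more data.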
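A sketch of the trade-off for a hypothetical test: a coin is flipped 20 times to test H0: p = 0.5 against HA: p > 0.5, rejecting H0 when the number of heads X is at least some cut-off. The Type II error is evaluated at an assumed true value p = 0.7. Lowering the cut-off makes the test reject more readily, which raises the Type I error probability and lowers the Type II error probability.

```python
from fractions import Fraction
from math import comb

n = 20
null_p = Fraction(1, 2)    # H0: p = 0.5
alt_p = Fraction(7, 10)    # assumed true value under HA, for illustration only

def prob_at_least(c, p):
    """P(X >= c) for X ~ B(n, p), computed exactly."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c, n + 1))

errors = {}
for cutoff in (14, 15, 16):
    type_1 = prob_at_least(cutoff, null_p)      # reject H0 although p = 0.5
    type_2 = 1 - prob_at_least(cutoff, alt_p)   # keep H0 although p = 0.7
    errors[cutoff] = (type_1, type_2)
    print(f"reject H0 if X >= {cutoff}: "
          f"P(Type I) = {float(type_1):.4f}, P(Type II) = {float(type_2):.4f}")
```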

Statistical significance

Statistical significance is usually assessed with a p-value: the probability of obtaining a result as extreme as, or more extreme than, the observed result, assuming the null hypothesis is true. A result is considered statistically significant if the p-value is below a threshold chosen in advance, often 0.05. A significant result suggests that the observed effect would be unlikely if the null hypothesis were true, which counts as evidence against it.
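A sketch of a p-value calculation for a hypothetical one-tailed test: a coin gives 16 heads in 20 flips, H0 is p = 0.5 and HA is p > 0.5. The p-value is the probability, under H0, of a result at least as extreme as the one observed, here P(X ≥ 16), and it is compared with a 5% significance level fixed in advance.

```python
from fractions import Fraction
from math import comb

n, observed = 20, 16
alpha = 0.05   # significance level chosen before looking at the data

# Under H0 every sequence of 20 flips has probability 1 / 2**20.
p_value = Fraction(sum(comb(n, k) for k in range(observed, n + 1)), 2**n)
print(f"p-value = {float(p_value):.4f}")                        # p-value = 0.0059
print("reject H0" if p_value < alpha else "do not reject H0")   # reject H0
```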

Critical regions

In hypothesis testing, the critical region is the set of values of the test statistic that would lead to the rejection of the null hypothesis, and a critical value is the boundary of that region. The critical region is chosen based on the desired level of significance, alpha (α), so that the probability of the test statistic falling in it when the null hypothesis is true is at most α. If the test statistic falls within the critical region, the null hypothesis is rejected in favor of the alternative hypothesis.
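For a discrete test statistic, the probability of the critical region usually cannot equal α exactly, so the region is taken as the largest one whose probability under H0 does not exceed α. A sketch for a hypothetical coin test (20 flips, H0: p = 0.5, HA: p > 0.5, α = 0.05) that searches for the smallest critical value c with P(X ≥ c) ≤ α.

```python
from fractions import Fraction
from math import comb

n = 20
alpha = Fraction(5, 100)

def upper_tail(c):
    """P(X >= c) under H0, where X ~ B(20, 0.5)."""
    return Fraction(sum(comb(n, k) for k in range(c, n + 1)), 2**n)

# Smallest c whose upper tail is within alpha; the critical region is X >= c.
critical_value = next(c for c in range(n + 1) if upper_tail(c) <= alpha)
actual_level = upper_tail(critical_value)
print(critical_value, f"{float(actual_level):.4f}")  # 15 0.0207
```

The actual significance level of this test, about 2.1%, is below the nominal 5% because moving the boundary down to 14 would push the probability of the region above α.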

Applications of Probability in Statistics

Sampling distribution

In statistics, sampling distributions play a crucial role in making inferences about a population based on sample data. The sampling distribution represents the distribution of a statistic, such as the mean or proportion, over many repeated samples. Probability is used to analyze the properties of sampling distributions, such as the mean and variance, and to make estimates and test hypotheses about population parameters.
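A simulation sketch of the sampling distribution of the mean: draw many samples of 30 fair-die rolls, record each sample mean, and look at how those means are spread. Probability theory predicts the centre (the population mean, 3.5) and the spread (the standard error σ/√n, about 1.708/√30 ≈ 0.312). The sample size, the number of samples and the seed are arbitrary.

```python
import math
import random
import statistics

random.seed(7)
sample_size, num_samples = 30, 10_000

# One sample mean per simulated sample of 30 die rolls.
means = [statistics.fmean(random.choices(range(1, 7), k=sample_size))
         for _ in range(num_samples)]

sigma = math.sqrt(35 / 12)   # standard deviation of a single fair-die roll
print(f"mean of sample means: {statistics.fmean(means):.3f} (theory 3.5)")
print(f"SD of sample means:   {statistics.pstdev(means):.3f} "
      f"(theory {sigma / math.sqrt(sample_size):.3f})")
```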

Central limit theorem

The central limit theorem is a fundamental theorem in probability and statistics. It states that when many independent, identically distributed random variables with finite variance are added, the distribution of their sum (or, equivalently, their mean) gets closer and closer to a normal distribution as the number of variables grows, regardless of the shape of the original distribution. This theorem is important because it allows us to approximate the distribution of sample means, proportions, and other statistics using the normal distribution, even if the underlying data is not normally distributed.
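A simulation sketch using a strongly skewed starting distribution: the exponential distribution with mean 1 and standard deviation 1, chosen only for illustration. If the normal approximation promised by the central limit theorem is good, about 95% of standardised sample means should fall within ±1.96. The sample size, number of repetitions and seed are arbitrary.

```python
import math
import random

random.seed(3)
n, reps = 50, 10_000
mu = sigma = 1.0   # mean and SD of the exponential distribution with rate 1

inside = 0
for _ in range(reps):
    sample_mean = sum(random.expovariate(1.0) for _ in range(n)) / n
    z = (sample_mean - mu) / (sigma / math.sqrt(n))   # standardise the sample mean
    inside += abs(z) <= 1.96

coverage = inside / reps
print(f"share within +/-1.96: {coverage:.3f}")   # close to 0.95
```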

Confidence intervals

Confidence intervals provide a way to estimate a population parameter, such as the mean or a proportion, with a range of plausible values rather than a single number. Probability is used to construct them from the sampling distribution of the estimate: a 95% confidence interval is produced by a method that, over many repeated samples, captures the true parameter about 95% of the time. The width of the interval reflects the precision of, and the uncertainty in, our estimate.
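The "95%" describes the procedure rather than any single interval. This sketch repeatedly samples 50 rolls of a fair die (true mean 3.5), builds the usual large-sample interval x̄ ± 1.96 × s/√n each time, and counts how often the interval contains 3.5. The normal-approximation interval, the sample size, the number of repetitions and the seed are illustrative assumptions.

```python
import math
import random
import statistics

random.seed(11)
true_mean, n, reps = 3.5, 50, 5_000
z = statistics.NormalDist().inv_cdf(0.975)   # about 1.96

covered = 0
for _ in range(reps):
    sample = random.choices(range(1, 7), k=n)
    centre = statistics.fmean(sample)
    half_width = z * statistics.stdev(sample) / math.sqrt(n)
    covered += centre - half_width <= true_mean <= centre + half_width

coverage = covered / reps
print(f"coverage: {coverage:.3f}")   # close to 0.95
```

Any single interval either contains 3.5 or it does not; it is the long-run proportion of intervals that is close to 95%.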

Regression analysis

Regression analysis is a statistical technique used to model and analyze the relationship between a dependent variable and one or more independent variables. Probability plays a role in regression analysis by providing a framework for estimating coefficients, testing the significance of predictors, and assessing the uncertainty of predictions. Through probability, we can draw conclusions and make predictions based on observed data and statistical models.
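A sketch of how probability enters regression: data are simulated from an assumed model y = 2 + 3x + noise, with normally distributed noise of standard deviation 1, and the least-squares slope and intercept are then estimated. The slope's standard error measures how much the estimate would vary from sample to sample. The model, its coefficients and the seed are hypothetical.

```python
import math
import random

random.seed(5)
xs = [random.uniform(0, 10) for _ in range(1_000)]
ys = [2 + 3 * x + random.gauss(0, 1) for x in xs]   # assumed true model plus random noise

n = len(xs)
x_bar, y_bar = sum(xs) / n, sum(ys) / n
sxx = sum((x - x_bar) ** 2 for x in xs)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))

slope = sxy / sxx                   # least-squares estimate of the true slope 3
intercept = y_bar - slope * x_bar   # least-squares estimate of the true intercept 2

# Estimate the noise SD from the residuals, then the slope's standard error.
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
sigma_hat = math.sqrt(sum(r * r for r in residuals) / (n - 2))
se_slope = sigma_hat / math.sqrt(sxx)

print(f"slope = {slope:.3f} +/- {1.96 * se_slope:.3f}, intercept = {intercept:.3f}")
```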

In conclusion, probability is a fundamental concept in statistics that allows us to measure uncertainty, make predictions, and draw conclusions based on available data. It provides a framework for analyzing data, constructing models, and making informed decisions. By understanding probability and its various rules and distributions, we can better grasp the complexities of statistics and harness its power in various real-world applications.